Error - A mistake made by a human being.

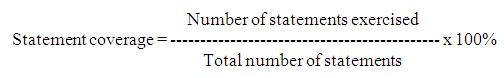

Defect - When a human being makes an error, a defect (also called a fault or bug) is produced in the code or document.

Failure - If a defective part of the system is executed, the system may fail to do what it should (or do something it shouldn't). This is a failure of the system. Not every defect leads to a failure, and not every failure is caused by a defect: environmental conditions (such as server problems or magnetism) can also cause failures.
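Here is a minimal illustration of the three terms. It uses a hypothetical requirement that is not from the source: a person aged 18 or over counts as an adult. The programmer's error (typing `>` instead of `>=`) leaves a defect in the code. That defect only shows up as a failure when the input happens to be exactly 18.

```python
def is_adult(age: int) -> bool:
    """Hypothetical requirement: return True for ages 18 and over."""
    # Defect: the programmer's error was writing '>' instead of '>='.
    return age > 18


# The defective line runs for every input, but these results are still
# correct, so no failure is observed.
print(is_adult(30))  # True, as required
print(is_adult(10))  # False, as required

# Only the boundary input turns the defect into a visible failure:
# the requirement expects True here.
print(is_adult(18))  # False
```

This also shows why the boundary value analysis technique described later targets values such as 18.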

Testing is essential because:

- It provides a measure of software quality (in terms of defects found)

- To meet legal/industry-specific standards

- To reduce the overall risk of the product

- It gives confidence in the quality of the software (when few or no defects are found)

- It helps in finding the root causes of defects, which in turn helps in developing preventive measures and improving processes.

- It provides information for decision making, e.g. whether development can move on to the next phase

- Maintenance/regression testing is essential to make sure that no new defects have been introduced into the system.

Testing includes the following processes:

- Planning and Control

- Choosing test conditions

- Designing and Executing test cases

- Checking results

- Reporting on the testing process and the system under test

- Evaluating Exit Criteria

How to prevent defects?

- Sharing early test designs with the development team

- Performing root cause analysis on defects found in previous development cycles

Testing Levels:

1. Component / Unit Testing

2. Integration Testing

3. System Testing

4. Acceptance Testing

Testing Types:

1. Functional Testing - includes Security testing and Interoperability Testing

2. Non-functional Testing - e.g. performance, load, stress, usability, maintainability, reliability and portability testing (basically, non-functional testing checks "how" the system works)

3. Structural / Architectural Testing - includes white box testing

4. Retesting (Confirmation Testing) and Regression Testing

Static Testing:

- Uses manual examinations (reviews) to find defects well before the execution of the code.

- Any software work product can be reviewed, e.g. requirement specifications, design specifications, code, test plans, test cases, test scripts, user guides or web pages.

- Typical defects found during reviews: deviations from standards, requirement defects, design defects, insufficient specifications

- Reviews find the causes of failures (i.e. the defects themselves) rather than the failures, which would only be observed during dynamic testing.

Types of Reviews:

1. Informal Review - no process followed

2. Walkthrough - The author presents his/her work to the other members. It is mainly meant for learning and knowledge sharing, but discussing defects is also welcome.

3. Technical Review - A more formal review in which the work product is discussed by the team in technical terms. It is used for making decisions, evaluating alternatives, finding defects, solving technical problems, and checking conformance to rules, procedures, plans and regulations.

4. Inspection - The most formal type of review, aimed at finding defects. It follows checklists and rules, has defined entry and exit criteria, and ends with an inspection report.

Test Design Techniques:

Black-box (specification-based) techniques - Tests are derived from the test basis documentation without looking at the code. They do not use any information about the internal structure of the component or system being tested.

- Equivalence Partitioning - Divide the inputs to the system into groups (partitions) that are expected to exhibit similar behavior. Both valid and invalid data are partitioned.

- Boundary Value Analysis - Testing at the boundaries of each equivalence partition

- Decision Table Testing - Business rules/logic are captured in a decision table (cause-effect table), and each rule (column) becomes a test. An example of a decision table follows:

| Conditions | Rule 1 | Rule 2 | Rule 3 | Rule 4 |
|---|---|---|---|---|
| Repayment amount has been entered | T | T | F | F |
| Term of loan has been entered | T | F | T | F |
| Actions/Outcomes | | | | |
| Process loan amount | Y | Y | N | N |
| Process term | Y | N | Y | N |

- State Transition Testing - Testing the software in terms of its states, the transitions between states, the inputs/events that trigger state changes, and the actions that may result from those transitions. Assumption: the system has a finite number of states.

For example, in a PIN entry system an incorrect PIN is rejected and the user may try again on the first two attempts, but a third incorrect PIN blocks the card. The system's response to the same input therefore depends on its previous state (how many attempts have already failed).

- Use Case Testing - Tests are derived from use cases, which describe interactions between actors (users or other systems) and the system. Useful for designing acceptance-level test cases.
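The black-box techniques above can be sketched in a few lines of Python. Most of this is hypothetical and only for illustration: the 1-99 input range and the `accepts` function (for equivalence partitioning and two-value boundary value analysis), the `loan_actions` implementation, and the `PinEntry` class with its PIN values. Only the loan rules and the three-attempt limit come from the text. Each technique is shown as a set of expected results that the (hypothetical) system under test is checked against.

```python
from enum import Enum
from typing import Tuple

# --- Equivalence partitioning and boundary value analysis ---
# Hypothetical requirement (not from the source): a field accepts whole
# numbers from 1 to 99 inclusive.
MIN_VALID, MAX_VALID = 1, 99


def accepts(value: int) -> bool:
    """Hypothetical system under test for the 1-99 requirement."""
    return MIN_VALID <= value <= MAX_VALID


# One representative value per partition: too low, valid, too high.
ep_tests = {-5: False, 50: True, 150: False}
# Each boundary and its nearest neighbour in the adjacent partition.
bva_tests = {MIN_VALID - 1: False, MIN_VALID: True,
             MAX_VALID: True, MAX_VALID + 1: False}

for value, expected in {**ep_tests, **bva_tests}.items():
    assert accepts(value) == expected, value

# --- Decision table testing ---
# The loan table from the text: (amount entered, term entered) -> actions.
# Each rule (column) becomes one test case.
loan_rules = {
    (True, True): ("process amount", "process term"),  # Rule 1
    (True, False): ("process amount",),                # Rule 2
    (False, True): ("process term",),                  # Rule 3
    (False, False): (),                                # Rule 4
}


def loan_actions(amount_entered: bool, term_entered: bool) -> Tuple[str, ...]:
    """Hypothetical implementation of the loan rules."""
    actions = []
    if amount_entered:
        actions.append("process amount")
    if term_entered:
        actions.append("process term")
    return tuple(actions)


for conditions, expected in loan_rules.items():
    assert loan_actions(*conditions) == expected, conditions


# --- State transition testing ---
class State(Enum):
    WAITING = "waiting for PIN"
    ACCESS_GRANTED = "access granted"
    CARD_BLOCKED = "card blocked"


class PinEntry:
    """Hypothetical PIN entry state machine: the result of entering a PIN
    depends on how many wrong attempts were already made."""

    MAX_ATTEMPTS = 3

    def __init__(self, correct_pin: str) -> None:
        self.correct_pin = correct_pin
        self.failed_attempts = 0
        self.state = State.WAITING

    def enter(self, pin: str) -> State:
        if self.state is not State.WAITING:
            return self.state  # no transitions out of the final states
        if pin == self.correct_pin:
            self.state = State.ACCESS_GRANTED
        else:
            self.failed_attempts += 1
            if self.failed_attempts == self.MAX_ATTEMPTS:
                self.state = State.CARD_BLOCKED
        return self.state


# The same wrong input three times: only the third attempt changes the state.
card = PinEntry("1234")
print([card.enter("0000").value for _ in range(3)])
# ['waiting for PIN', 'waiting for PIN', 'card blocked']
```

The state transition part shows why the previous state matters. Identical inputs give different results depending on how many failed attempts came before.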

White-box (structure-based) techniques - Tests are based on an analysis of the structure of the component or system.

It has 3 levels:

1. Component level - structure of a software component (statements, decisions, branches, distinct paths)

2. Integration level - the structure may be a call tree (a diagram showing which modules call other modules)

3. System level - menu structure/business process or web page structure

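Statement coverage: Statement coverage (also known as line coverage or segment coverage) is the percentage of executable statements exercised by a test set. To reach 100% statement coverage, every executable statement must be executed at least once. It does not require the False outcome of a decision to be exercised: an IF without an ELSE can reach 100% statement coverage with tests that take only the True outcome.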

Consider the following code sample:

```
READ X
READ Y
IF X > Y THEN Z = 0
ENDIF
```

To achieve 100% statement coverage of this code segment, just one test case is required: one which ensures that variable X contains a value that is greater than the value of variable Y, for example X = 12 and Y = 10. Note that here we are doing structural test design first, since we are choosing our input values in order to ensure statement coverage.
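To see why one test is enough, here is the code sample above (READ X, READ Y, IF X > Y THEN Z = 0) written in Python with hand-written instrumentation. The instrumentation is a rough stand-in for what an intrusive coverage measurement tool does. The statement labels and the function names `code_sample` and `statement_coverage` are illustrative assumptions.

```python
from typing import List, Optional, Set, Tuple

# Labels for every executable statement in the code sample
# (ENDIF only closes the IF, so it is not counted).
ALL_STATEMENTS = {"READ X", "READ Y", "IF X > Y", "Z = 0"}


def code_sample(x: int, y: int, executed: Set[str]) -> Optional[int]:
    """Python rendering of the code sample, instrumented by hand:
    each statement adds its label to `executed` when it runs."""
    executed.add("READ X")  # x is passed in instead of being read
    executed.add("READ Y")  # y is passed in instead of being read
    z = None
    executed.add("IF X > Y")
    if x > y:
        executed.add("Z = 0")
        z = 0
    return z


def statement_coverage(tests: List[Tuple[int, int]]) -> float:
    """Percentage of ALL_STATEMENTS executed by the given (X, Y) inputs."""
    executed: Set[str] = set()
    for x, y in tests:
        code_sample(x, y, executed)
    return 100 * len(executed) / len(ALL_STATEMENTS)


print(statement_coverage([(12, 10)]))  # 100.0: the single test from the text
print(statement_coverage([(10, 12)]))  # 75.0: "Z = 0" never runs
```

Note that the test X = 12, Y = 10 never takes the False exit of the IF, yet it still reaches 100% statement coverage.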

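Decision coverage: Decision coverage (also known as branch coverage or all-edges coverage) is the percentage of decision outcomes exercised by a test set. Unlike statement coverage, 100% decision coverage requires both the True and the False outcome of every decision to be exercised.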

100% decision coverage guarantees 100% statement coverage, but not the other way around.

Consider the code:

```
READ A
READ B
C = A - 2 * B
IF C < 0 THEN
    PRINT "C negative"
ENDIF
```

Let’s suppose that we already have the following test, which gives us 100% statement coverage for code sample 4.3.

TEST SET 2

Test 2_1: A = 20, B = 15

The value of C is 20 - 2 × 15 = -10, so the condition 'C < 0' is True, so we will print 'C negative' and we have executed the True outcome from that decision statement. But we have not executed the False outcome of the decision statement. What other test would we need to exercise the False outcome and to achieve 100% decision coverage?

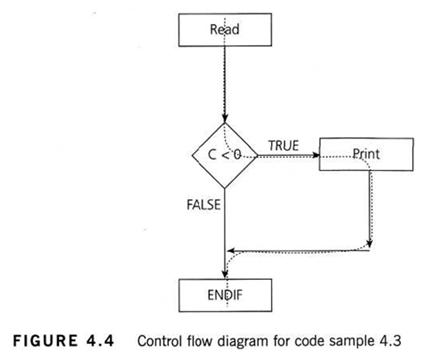

Before we answer that question, let's have a look at another way to represent this code. Sometimes the decision structure is easier to see in a control flow diagram (see Figure 4.4).

The dotted line shows where Test 2_1 has gone and clearly shows that we haven't yet had a test that takes the False exit from the IF statement.

Let’s modify our existing test set by adding another test:

TEST SET 2

Test 2_1: A = 20, B = 15

Test 2_2: A = 10, B = 2

This now covers both of the decision outcomes: True (with Test 2_1) and False (with Test 2_2, where C = 10 - 2 × 2 = 6, which is not less than 0). If we were to draw the path taken by Test 2_2, it would be a straight line from the read statement down the False exit and through the ENDIF. We could also have chosen other numbers to achieve either the True or False outcomes.
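A minimal sketch of the same comparison in Python, assuming hand-written instrumentation like a coverage tool would add. It records the executed statements and which exit of the IF was taken for code sample 4.3 (C = A - 2 * B). The labels and the names `code_sample` and `coverage` are illustrative, not from the source.

```python
from typing import List, Optional, Set, Tuple

# Executable statements of the code sample (ENDIF excluded) and the two
# possible outcomes of its single decision.
ALL_STATEMENTS = {"READ A", "READ B", "C = A - 2 * B", "IF C < 0", 'PRINT "C negative"'}
ALL_OUTCOMES = {True, False}


def code_sample(a: int, b: int, statements: Set[str], outcomes: Set[bool]) -> Optional[str]:
    """Python rendering of the code sample that records executed statements
    and which exit (True/False) of the IF was taken."""
    statements.update({"READ A", "READ B"})  # a and b are passed in
    c = a - 2 * b
    statements.add("C = A - 2 * B")
    statements.add("IF C < 0")
    outcomes.add(c < 0)
    if c < 0:
        statements.add('PRINT "C negative"')
        return "C negative"
    return None


def coverage(tests: List[Tuple[int, int]]) -> Tuple[float, float]:
    """Return (statement coverage %, decision coverage %) for (A, B) inputs."""
    statements: Set[str] = set()
    outcomes: Set[bool] = set()
    for a, b in tests:
        code_sample(a, b, statements, outcomes)
    return (100 * len(statements) / len(ALL_STATEMENTS),
            100 * len(outcomes) / len(ALL_OUTCOMES))


print(coverage([(20, 15)]))           # (100.0, 50.0): Test 2_1 alone
print(coverage([(20, 15), (10, 2)]))  # (100.0, 100.0): Test 2_1 and Test 2_2
```

The output matches the reasoning above. Test 2_1 alone already gives 100% statement coverage but only 50% decision coverage. Adding Test 2_2 brings decision coverage to 100%.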

Experience-based techniques - Tests are based on the knowledge and experience of testers, developers, users and other stakeholders about the software. Error guessing is the most commonly used experience-based technique.

Exploratory testing is about exploring, finding out about the software, what it does, what it doesn’t do, what works and what doesn’t work. This is an approach that is most useful when there are no or poor specifications and when time is severely limited. It is a hands-on approach in which testers are involved in minimum planning and maximum test execution.

Types of tools used in testing (classified by the test activities they support). Tools marked (D) are more likely to be used by developers:

1. Tools supporting Management of testing and tests:

- Test Management Tools: Provide interfaces for executing tests, tracking defects and managing requirements, and support reporting on the test objects.

- Requirement Management Tools: Store requirement statements and their attributes, and support tracing of the requirements to individual tests (requirement traceability).

- Incident Management Tools: Store and manage incident reports, i.e. defects, failures, change requests, etc., and support statistical analysis of them.

- Configuration Management Tools: Used for version management/control of the testware and related software

2. Tool support for Static Testing:

- Review Tools: Assist with review processes, guidelines, checklists and are used to store and communicate review comments and report on defects and effort

- Static Analysis Tools (D): Help developers and testers find defects before dynamic testing by enforcing coding standards and analyzing structures and dependencies. They also provide code metrics, such as complexity.

- Modelling Tools (D): Used to validate software models (ex: physical data model for a relational database). These tools also help in generating some test cases based on the model.

3. Tool support for Test Specification:

- Test Design Tools: Used to generate executable tests or test inputs from requirements, graphical user interfaces, design models or code.

- Test Data Preparation tools: These tools manipulate the databases, files or data transmissions to set up test data to be used during the execution of the tests.

4. Tool support for Test Execution and Logging:

- Test Execution Tools: Enable tests to be executed automatically or semi-automatically, using stored inputs and expected outcomes, through the use of a scripting language. They provide a test log for each run.

- Test Harness/ Unit Test Framework Tools (D): These tools facilitate the testing of components or parts of a system by simulating the environment in which the test object will run through the use of stubs/drivers.

- Test Comparators: Determine the differences between files, databases or test results. Test execution tools typically include dynamic comparators, but post-execution comparison may be done by a separate comparison tool.

- Coverage Measurement Tools: Measure, through intrusive or non-intrusive means, the percentage of specific types of code structures (statements, branches, conditions, etc.) exercised by a set of tests.

- Security Testing Tools: Used to evaluate the security characteristics of the software.

5. Tools for Performance and Monitoring:

- Dynamic Analysis Tools (D): Find defects that are only evident while the software is executing, such as time dependencies or memory leaks.

- Performance Testing/Load Testing/Stress Testing Tools: Monitor and report on how a system behaves under a variety of simulated usage conditions, such as the number of concurrent users, their ramp-up pattern, and the frequency and relative percentage of transactions. They use virtual users to generate the load.

- Monitoring Tools: These tools continuously analyze, verify and report on usage of specific system resources and give warnings of possible service problems

6. Tools for specific testing needs:

- Data Quality Assessment: Used in data conversion/migration projects where data quality would be the main concern.
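The stubs and drivers used by Test Harness/Unit Test Framework Tools can be illustrated without any tool. In this sketch, which is entirely hypothetical, the component under test `withdraw` calls an account service that is assumed not to be available yet. A stub stands in for that called service. A driver calls the component with prepared inputs and expected outcomes and produces a simple test log.

```python
from typing import List


class AccountServiceStub:
    """Stub: replaces the real (hypothetical) account service called by the
    component and returns a fixed balance instead of querying a real system."""

    def __init__(self, balance: int) -> None:
        self.balance = balance

    def get_balance(self, account_id: str) -> int:
        return self.balance


def withdraw(service, account_id: str, amount: int) -> bool:
    """Component under test (hypothetical): allow a withdrawal only if the
    amount is positive and does not exceed the balance."""
    return 0 < amount <= service.get_balance(account_id)


def driver() -> List[str]:
    """Driver: calls the component with prepared inputs and expected
    outcomes, and returns a test log with one line per test."""
    cases = [(100, 50, True), (100, 100, True), (100, 101, False), (100, 0, False)]
    log = []
    for balance, amount, expected in cases:
        actual = withdraw(AccountServiceStub(balance), "ACC-1", amount)
        verdict = "PASS" if actual == expected else "FAIL"
        log.append(f"balance={balance} amount={amount}: {verdict}")
    return log


for line in driver():
    print(line)
```

Because the stub returns a fixed balance, each test result depends only on the component's own logic. This is the point of isolating a component in a harness.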
